Want to wade into the snowy surf of the abyss? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned so many “esoteric” right-wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality-challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Credit and/or blame to David Gerard for starting this. This was a bit late - I was too busy goofing around on Discord)

Merriam-Webster’s human editors have chosen slop as the 2025 Word of the Year. We define slop as “digital content of low quality that is produced usually in quantity by means of artificial intelligence.” All that stuff dumped on our screens, captured in just four letters: the English language came through again.

https://www.merriam-webster.com/wordplay/word-of-the-year?2025-share

did they use ai to make the image? “wood of of year”

It’s most obvious on the cat which is all around nightmare material.

The image also comes with alt text:

a bizarre collection of ai-generated illustrations including a sign that reads wood of of year and a chyron that reads breaking news

Can I just take a moment to appreciate Merriam-Webster for coming in clutch with the confirmation that we’re not misunderstanding the “6-7” meme that the kids have been throwing around?

Sunday afternoon slack period entertainment: image generation prompt “engineers” getting all wound up about people stealing their prompts and styles and passing off hard work as their own. Who would do such a thing?

https://bsky.app/profile/arif.bsky.social/post/3mahhivnmnk23

@Artedeingenio

Never do this: Passing off someone else’s work as your own.

This Grok Imagine effect with the day-to-night transition was created by me — and I’m pretty sure that person knows it. To make things worse, their copy has more impressions than my original post.

Not cool 👎

Ahh, sweet schadenfreude.

I wonder if they’ve considered that it might actually be possible to get a reasonable imitation of their original prompt by using an llm to describe the generated image, and just tack on “more photorealistic, bigger boobies” to win at imagine generation.

Ryanair now makes you install their app instead of allowing you to just print and scan your ticket at the airport, claiming it’s “better for our environment (gets rid of 300 tonnes of paper annually).” Then you log into the app and you see there’s an update about your flight, but you don’t see what it’s about. You need to open an update video, which, of course, is a generated video of an avatar reading it out for you. I bet that’s better for the environment than using some of these weird symbols that I was putting into a box and that have now magically appeared on your screen and are making you feel annoyed (in the future for me, but present for you).

chat is this kafkaesque

New conspiracy theory: Posadist aliens have developed a virus that targets CEOs and makes them hate money.

….this made me twitch

Rewatched Dr. Geoff Lindsey’s video about deaccenting in the English language and how “AI” speech synthesizers and youtubers tend to get it wrong. In the case of the latter, it’s usually due to reading from a script or being an L2 English speaker whose native language doesn’t use destressing.

It reminded me of a particular line in Portal

spoilers for Portal (2007 puzzle game)

GLaDOS: (with a deeper, more seductive, slightly less monotone voice than until now) “Good news: I figured out what that thing you just incinerated did. It was a morality core they installed after I flooded the Enrichment Center with a deadly neurotoxin to make me stop flooding the Enrichment Center with a deadly neurotoxin.”

The words “the Enrichment Center with a deadly neurotoxin” are spoken with the exact same intonation both times, which helps maintain the robotic affect in GLaDOS’s voice even after it shifts to be slightly more expressive.

Now I’m wondering if people whose native language lacks deaccenting even find the line funny. To me it’s hilarious to repeat a part of a sentence without changing its stress because in English and Finnish it’s unusual to repeat a part of a sentence without changing its stress.

It is not lost on me that the fictional evil AI was written with a quirk in its speech to make it sound more alien and unsettling, and real life computer speech has the same quirk, which makes it sound more alien and unsettling.

To me it’s hilarious to repeat a part of a sentence without changing its stress because in English and Finnish it’s unusual to repeat a part of a sentence without changing its stress.

Not a native speaker of either language but I read this in my mind without changing its stress in the part where it repeated “without changing its stress”.

That’s interesting. If I weren’t going for a comical effect I’d try and rephrase the sentence, probably with a relative pronoun or something similar, but if unable to do so* I’d probably deemphasize the whole phrase the second time I say it. Though in terms of multi-word phrases, I think intonation would be the more accurate word to use than stress per se.

*“To do so” would be another way to avoid repetition

Popular RPG Expedition 33 got disqualified from the Indie Game Awards due to using Generative AI in development.

Statement on the second tab here: https://www.indiegameawards.gg/faq

When it was submitted for consideration, representatives of Sandfall Interactive agreed that no gen AI was used in the development of Clair Obscur: Expedition 33. In light of Sandfall Interactive confirming the use of gen AI art in production on the day of the Indie Game Awards 2025 premiere, this does disqualify Clair Obscur: Expedition 33 from its nomination.

Today in autosneering:

KEVIN: Well, I’m glad. We didn’t intend it to be an AI focused podcast. When we started it, we actually thought it was going to be a crypto related podcast and that’s why we picked the name, Hard Fork, which is sort of an obscure crypto programming term. But things change and all of a sudden we find ourselves in the ChatGPT world talking about AI every week.

https://bsky.app/profile/nathanielcgreen.bsky.social/post/3mahkarjj3s2o

Obscure crypto programming term. Sure

Follow the hype, Kevin, follow the hype.

I hate-listen to his podcast. There’s not a single week where he fails to give a thorough tongue-bath to some AI hypester. Just a few weeks ago when Google released Gemini 3, they had a special episode just to announce it. It was a de facto press release, put out by Kevin and Casey.

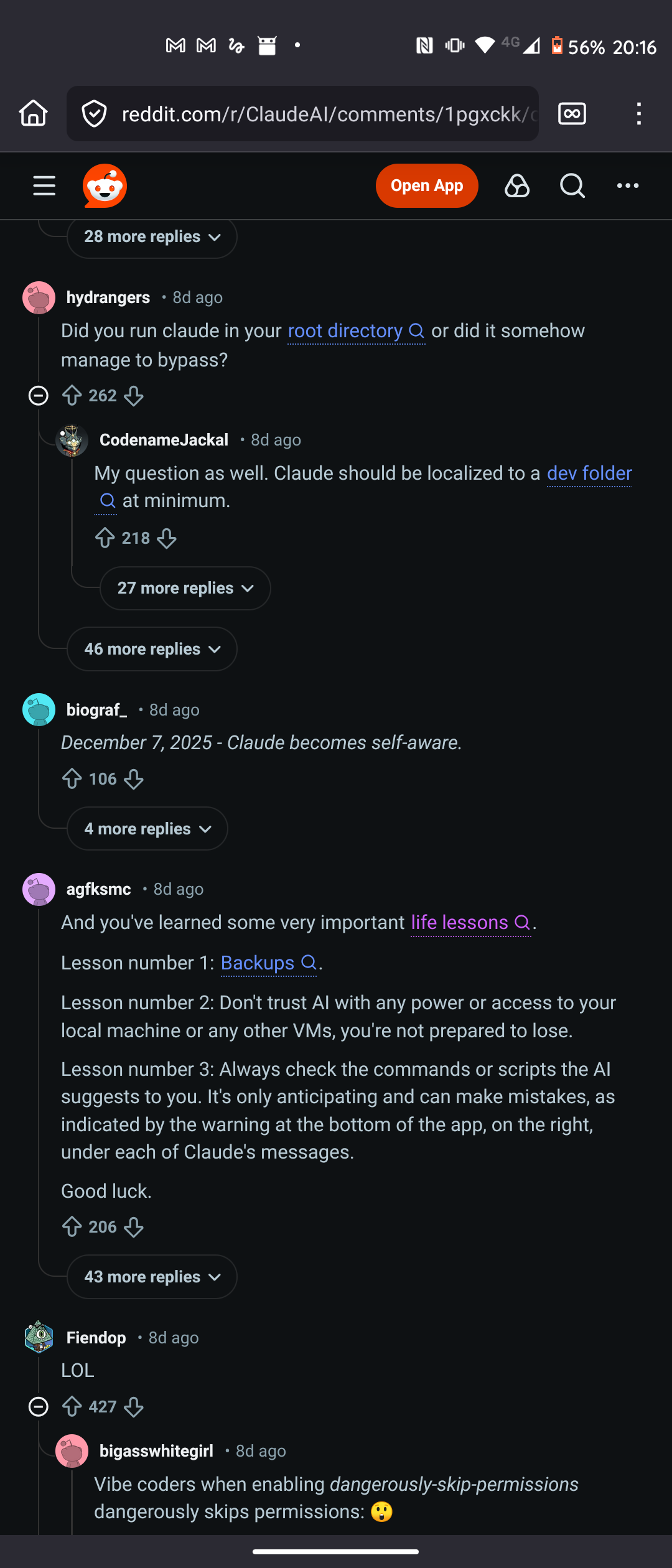

Why is my home directory gone Claude?

See that ~/ at the end? That’s your entire home directory.

This just keeps happening…

Sir a NaNth deletion has hit the home directory.

Oh god, reddit is now turning comments into links to search for other comments and posts that include the same terms or phrases.

A few people on bsky were claiming that at least reddit is still good re the AI crappification, and they have no idea what is coming.

I wonder when those people started using reddit. I started in 2012 and it already felt like a completely different (and generally worse) experience several times over before the great API fiasco.

Yeah, it also has an element of ‘it is one of the few words you can add to search engines which give you a hope of a good result’ and not regular users who see the shit, or got offered nfts.

it shows up only when logged out

You see, tilde marks old versions of files, so Claude actually did you a favour by freeing some disk space

……snrk

iykyk. this comment is sublime

i would say “backups” but these kind of people don’t do backups. either way nothing of value was lost

~/? More like ¯\_(ツ)_/¯

@e8d79 @BlueMonday1984 is this even reversible?

Assuming that, and Apple makes it real easy, you have a Time Machine backup: Yes, of course!

More on datacenters in space

https://andrewmccalip.com/space-datacenters

N.B. got this via HN, entire site gives off “wouldn’t it be cool” vibes (author “lives and breathes space” IRONIC IT’S A VACUUM)

Also this is the only thermal mention

Thermal: only solar array area used as radiator; no dedicated radiator mass assumed

riiiiight…

I also enjoy:

Radiation/shielding impacts on mass ignored; no degradation of structures beyond panel aging

Getting high-powered electronics to work outside the atmosphere or the magnetosphere is hard, and going from a 100 meter long ISS to a 4 km long orbital data center would be hard. The ISS has separate cooling radiators and solar panels. He wants LEO to reduce the effects of cosmic rays and solar storms, but it’s already hard to keep satellites from crashing into something in LEO.

Possible explanation for the hand waving:

I love AI and I subscribe to maximum, unbounded scale.

“Your mother shubscribed to makshimum, unbounded shcale last night, Trebek.”

He knows the promo rate on the maximum, unbounded scale subscription is gonna run out eventually, right?

promo rate

And if you check the fliers, if you subscribe to premium California Ideology you get maximum unbounded scale for free!¹ Read those footnotes and check Savvy Shopper so you don’t overpay for your beliefs!

¹ Offer does not apply to housing, public transit, or power plants

Author works for something called Varda Space (guess who is one of the major investors? drink. Guess what orifice the logo looks like? drink) and previously tried to replicate a claimed room-temperature superconductor https://www.wired.com/story/inside-the-diy-race-to-replicate-lk-99/

Some interesting ethnography of private space people in California: "People jump straight to hardware and hand-wave the business case, as if the economics are self-evident. They aren’t. "

Page uses that “electrons = electricity” metonymy that prompt-fondling CEOs have been using

The electrons is turning into an annoying shibboleth. Also going to age oddly if more light based components really kick off. (Ran into somebody who is doing some phd work on that, or at least that is what I got from the short description he gave).

Him fellating musk re tesla is funny considering the recent stories about reliability and how the market is doing. And also the roadster 2, and the whole pivot to ai/ROBOTS!

(The author being positive on the theoretical SpaceX going public vs a little bit later the reactions of the spacex subreddit on Musk actually saying they will go public later is a funny split of opinions. The subreddit saw it as a betrayal https://bsky.app/profile/niedermeyer.online/post/3ma4hvbajns2d).

https://kevinmd.com/2025/12/why-ai-in-medicine-elevates-humanity-instead-of-replacing-it.html h/t naked capitalism

Throughout my nearly three decades in family medicine across a busy rural region, I watched the system become increasingly burdened by administrative requirements and workflow friction. The profession I loved was losing time and attention to tasks that did not require a medical degree. That tension created a realization that has guided my work ever since: If physicians do not lead the integration of AI into clinical practice, someone else will. And if they do, the result will be a weaker version of care.

I feel for him, but MAYBE this isn’t a technical issue but a labor one; maybe 30 years ago doctors should have “led” on admin and workflow issues directly, and then they wouldn’t need to “lead” on AI now? I’m sorry Cerner / Epic sucks but adding AI won’t make it better. But, of course, class consciousness evaporates about the same time as those $200k student loans come due.

Why do they think they are going to have any input in genAI development either way?

Anyway, seeing a previous wave of shit burden you with a lot of unrelated work after deployment isn’t the best reason to now start burdening yourself with a lot of unrelated work before the new wave of shit is here. But sure, good luck learning how LLMs work mathematically, Kevin.

Today, in fascists not understanding art, a suckless fascist praised Mozilla’s 1998 branding:

This is real art; in stark contrast to the brutalist, generic mess that the Mozilla logo has become. Open source projects should be more daring with their visual communications.

Quoting from a 2016 explainer:

[T]he branding strategy I chose for our project was based on propaganda-themed art in a Constructivist / Futurist style highly reminiscent of Soviet propaganda posters. And then when people complained about that, I explained in detail that Futurism was a popular style of propaganda art on all sides of the early 20th century conflicts… Yes, I absolutely branded Mozilla.org that way for the subtext of “these free software people are all a bunch of commies.” I was trolling. I trolled them so hard.

The irony of a suckless developer complaining about brutalism is truly remarkable; these fuckwits don’t actually have a sense of art history, only what looks cool to them. Big lizard, hard-to-read font, edgy angular corners, and red-and-black palette are all cool symbols to the teenage boy’s mind, and the fascist never really grows out of that mindset.

It irks me to see people casually use the term “brutalist” when what they really mean is “modern architecture that I don’t like”. It really irks me to see people apply the term brutalist to something that has nothing to do with architecture! It’s a very specific term!

“Brutalist” is the only architectural style they ever learned about, because the name implies violence

Ben Williamson, editor of the journal Learning, Media and Technology:

Checking new manuscripts today I reviewed a paper attributing 2 papers to me I did not write. A daft thing for an author to do of course. But intrigued I web searched up one of the titles and that’s when it got real weird… So this was the non-existent paper I searched for:

Williamson, B. (2021). Education governance and datafication. European Educational Research Journal, 20(3), 279–296.

But the search result I got was a bit different…

Here’s the paper I found online:

Williamson, B. and Piattoeva, N. (2022) Education Governance and Datafication. Education and Information Technologies, 27, 3515-3531.

Same title but now with a coauthor and in a different journal! Nelli Piattoeva and I have written together before but not this…

And so checked out Google Scholar. Now on my profile it doesn’t appear, but somehow on Nelli’s it does and … and … omg, IT’S BEEN CITED 42 TIMES almost exclusively in papers about AI in education from this year alone…

Which makes it especially weird that in the paper I was reviewing today the precise same, totally blandified title is credited in a different journal and strips out the coauthor. Is a new fake reference being generated from the last?..

I know the proliferation of references to non-existent papers, powered by genAI, is getting less surprising and shocking but it doesn’t make it any less potentially corrosive to the scholarly knowledge environment.

Relatedly, AI is fucking up academic copy-editing.

One of the world’s largest academic publishers is selling a book on the ethics of artificial intelligence research that appears to be riddled with fake citations, including references to journals that do not exist.

Eliezer is mad OpenPhil (EA organization, now called Coefficient Giving)… advocated for longer AI timelines? And apparently he thinks they were unfair to MIRI, or didn’t weight MIRI’s views highly enough? And doing so for epistemically invalid reasons? IDK, this post is a bit more of a rant and less clear than classic sequence content (but is par for the course for the last 5 years of Eliezer’s content). For us sane people, AGI by 2050 is still a pretty radical timeline, it just disagrees with Eliezer’s imminent belief in doom. Also, it is notable Eliezer has actually avoided publicly committing to consistent timelines (he actually disagrees with efforts like AI2027) other than a vague certainty we are near doom.

Some choice comments

I recall being at a private talk hosted by ~2 people that OpenPhil worked closely with and/or thought of as senior advisors, on AI. It was a confidential event so I can’t say who or any specifics, but they were saying that they wanted to take seriously short AI timelines

Ah yes, they were totally secretly agreeing with your short timelines but couldn’t say so publicly.

Open Phil decisions were strongly affected by whether they were good according to worldviews where “utter AI ruin” is >10% or timelines are <30 years.

OpenPhil actually did have a belief in a pretty large possibility of near term AGI doom, it just wasn’t high enough or acted on strongly enough for Eliezer!

At a meta level, “publishing, in 2025, a public complaint about OpenPhil’s publicly promoted timelines and how those may have influenced their funding choices” does not seem like it serves any defensible goal.

Lol, someone noting Eliezer’s call out post isn’t actually doing anything useful towards Eliezer’s goals.

It’s not obvious to me that Ajeya’s timelines aged worse than Eliezer’s. In 2020, Ajeya’s median estimate for transformative AI was 2050. […] As far as I know, Eliezer never made official timeline predictions

Someone actually noting AGI hasn’t happened yet and so you can’t say a 2050 estimate is wrong! And they also correctly note that Eliezer has been vague on timelines (rationalists are theoretically supposed to be preregistering their predictions in formal statistical language so that they can get better at predicting and people can calculate their accuracy… but we’ve all seen how that went with AI 2027. My guess is that at least on a subconscious level Eliezer knows harder near term predictions would ruin the grift eventually.)

Yud:

I have already asked the shoggoths to search for me, and it would probably represent a duplication of effort on your part if you all went off and asked LLMs to search for you independently.

The locker beckons

I’m a nerd and even I want to shove this guy in a locker.

The fixation on their own in-group terms is so cringe. Also I think shoggoth is kind of a dumb term for LLMs. Even accepting the premise that LLMs are some deeply alien process (and not a very wide but shallow pool of different learned heuristics), shoggoths weren’t really that bizarrely alien: they broke free of their original creators’ programming and didn’t want to be controlled again.

There is a Yud quote about closet goblins in More Everything Forever p. 143 where he thinks that the future-Singularity is an empirical fact that you can go and look for, so it’s irrelevant to talk about the psychological needs it fills. Becker also points out that “how many people will there be in 2100?” is not the same sort of question as “how many people are registered residents of Kyoto?” because you can’t observe the future.

Yeah, I think this is an extreme example of a broader rationalist trend of taking their weird in-group beliefs as givens and missing how many people disagree. Like, most AI researchers do not believe in the short timelines they do; the median guess for AGI among AI researchers (including their in-group and people who have bought the boosters’ hype) is 2050. Eliezer apparently assumes short timelines are self-evident from ChatGPT (but hasn’t actually committed to one or a hard date publicly).

This is old news but I just stumbled across this fawning 2020 Elon Musk interview / award ceremony on the social medias and had to share it: https://www.youtube.com/live/AF2HXId2Xhg?t=2109

In it Musk claims synthetic mRNA (and/or DNA) will be able to do anything and it is like a computer program, and that stopping aging probably wouldn’t be too crazy. And that you could turn someone into a freakin’ butterfly if you want to with the right DNA sequence.

This is what you get when you take Star Trek episodes where the writers had run out of ideas and watch them from the bottom of a K-hole.

And just think, he’s been further pickling his brain for half a decade since then.

be elon musk

binge ket, adderall, and ST: Voyager one weekend

burst into monday morning SpaceX board meeting after 3 nights of no sleep

crash into table

get a nasty wound on scalp

it’s bleeding pretty bad

stand atop board room table and shout “We must RETVRN TO AMPHIVIAN”

also we’re naming the next crew Dragon capsule “Admiral Janeway”

everybody claps

Tesla stock goes up

Threshold was best episode imo.

There’s a version animated in the style of the '70s Star Trek cartoon that makes it legitimately great.

For the love of god please hook me up.

Oh my god.

Much like when the Voyager passed warp 13, our AI development is moving too fast with potentially magnitudinous consequences.

this is not even wrong lol

It certainly comes across a little different when said by someone who thinks cisgender is a slur and that changing one’s sex is some sort of great moral evil.

Turning into a butterfly is a cool sci-fi future but those trans people are a bridge too far.

Also like it’s just hard to listen to, being drug hazed ramblings-- I want some actually fun sci-fi speeches!

Semi-related, the SF author John Varley died the other day, and I remember how both transgressive and cool it was that his characters in Steel Beach and others could change gender basically at will. (Banks ripped this off in the Culture btw). I don’t think he had a special insight into the lived experience of trans people, but at least he embraced the idea as part of humanity’s future, rather than recoiling from it like later epigones.

Michael Swanwick mini-obit: https://floggingbabel.blogspot.com/2025/12/john-varley-1947-2025.html

HN on Varley: https://news.ycombinator.com/item?id=46269991

He was doing the easy sex-swapping thing in The Ophiuchi Hotline, several years before Steel Beach.

So I gather but SB is the only book I’ve read

Introducing the Palantir shit sandwich combo: Get a cover up for the CEO tweaking out and start laying the groundwork for the AGI god’s priest class absolutely free!

https://mashable.com/article/palantir-ceo-neurodivergent

TL;DR- Palantir CEO tweaks out during an interview. Definitely not any drugs guys, he’s just neurodivergent! But the good, corporate approved kind. The kind that has extra special powers that make them good at AI. They’re so good at AI, and AI is the future, so Palantir is starting a group of neurodivergents hand picked by the CEO (to lead humanity under their totally imminent new AI god). He totally wasn’t tweaking out. He’s never even heard of cocaine! Or billionaire designer drugs! Never ever!

Edit: To be clear, no hate against neurodivergence, or skepticism about it in general. I’m neurodivergent. And yeah, some types of neurodivergence tend to result in people predisposed to working in tech.

But if you’re the fucking CEO of Palantir, surely you’ve been through training for public appearances. It’s funnier that it didn’t take, but this is clearly just an excuse.

I strongly feel that it’s an attempt to start normalizing the elevation of certain people into positions of power based off vague characteristics they were born with.

Lemmy post that pointed me to this: https://sh.itjust.works/post/51704917

Jesus. This being 2025 of course he had to clarify that it’s definitely not DEI. Also it really grinds me gears to see hyperfocus listed as one of the “beneficial” aspects because there’s no way it’s not exploitative. Hey, so you know how sometimes you get so caught up in a project you forget to eat? Just so you know, you could starve on the clock. For me.

I feel bad for the gullible ND people who spend time applying to this thinking they might have a chance and it isn’t a high level coverup attempt.

Otoh, somebody should take some fun drugs and tape their interviews, see how it works out. Are there any Hunter S Tech journalists around?

Orange site mods retitled a post about a16z funding AI slop farms to remove the a16z part.

The mod tried to pretend the reason was that the title was just too damn long and clickbaity. His new title was 1 character shorter than the original.

I don’t love the title but it’s the best I could come up with to fit within the 80 character limit.

A half dozen people might still be reading hackernews on punchcards so they ha-

ve no choice but to argue about how to shorten “long” titles every day.

Good to know that Orange Website is being considerate of us VT220 users. I knew there was a reason why mine has the amber phosphor.

I went down a punch-card history rabbit hole today on the empirical software engineering discord. TIL:

- IBM leased machines, didn’t sell them

- Lease required using IBM-branded punchcards (Razor and Blade Model)

- Until “The Consent Decree of 1956” forced them to unbundle https://www.nytimes.com/1994/06/09/business/ibm-and-the-limits-of-a-consent-decree.html

- The competing punch card standard of the time had 90 columns

We have been living in a world 10 columns too short. Think of all the HN headlines we could have had instead…

I imagine it was like VHS vs Beta only with pocket protectors.

Razor and Blade Model

HACK THE PLANET

- IBM leased machines, didn’t sell them

80 character limit

Lot of roguelike development in the past was still obsessed with that. Was a bit amusing, think even they have dropped this now.