OK. Very hot take.

…Computers can produce awful things. That’s not new. They’re tools that can manufacture unspeakable stuff in private.

That’s fine.

It’s not going to change.

And if some asshole uses it that way to damage others, you throw them in jail forever. That’s worked well enough for all sorts of tech.

The problem is making the barrier to do it basically zero, automatically posting it to fucking Twitter, and just collectively shrugging because… what? Social media is fair discourse? That’s bullshit.

The problem is Twitter more than Grok. The problem is their stupid liability shield.

Strip Section 230, and Musk’s lawyers would fix this problem faster than you can blink.

Without 230, it’s pretty clear that the web would die a messy death. People like Elon would be the only ones who could afford to offend anyone, because anyone with a few hundred or a few thousand dollars could make you spend 30 or 40k defending yourself.

Got any other stupid ideas?


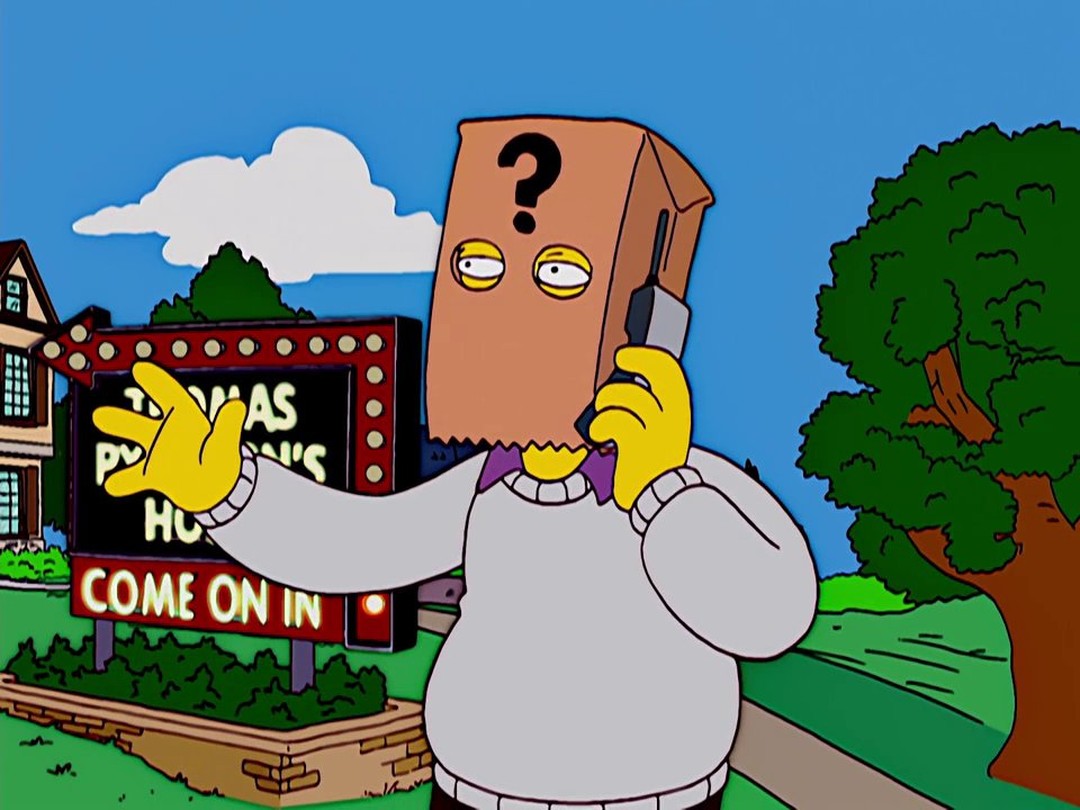

Why is the headline putting the blame on an inanimate program? If those X posters had used Photoshop to do this, the headline would not be “Photoshop edited Good’s body…”

Controlling the bot with a natural-language interface does not mean the bot has agency.

But the software knows who she is, and why is software anywhere allowed to generate semi nude images of random women?

Guns don’t kill people but a lot of people in one country get killed with guns.

In general, why is software anywhere allowed to generate images of real people? If it’s not clearly identifiable as fake, it should be illegal imo (same goes for photoshop).

Like I’d probably care much less if someone deepfaked a nude of me than if they deepfaked me at a pro-afd demo. Both aren’t ok.

You can do this with your own AI program, which is complicated and expensive, or with Photoshop, which is much harder, but you can’t with OpenAI.

Making it harder decreases bad behaviour so we should do that.

I don’t know the specifics for this reported case, and I’m not interested in learning them, but I know part of the controversy with the Grok deepfake thing when it first became a big story was that Grok was starting to add risqué elements to prompted pictures even when the prompt didn’t ask for them. But yeah, if users are giving shitty prompts (and I’m sure too many are), they are equally at fault with Grok’s devs/designers, who did not put in safeguards to prevent those prompts from being actionable before releasing it to the public.

PREDATORS used Grok to deepfake…

A predator put out grok.

Sure, and that’s bad too.

But Elon isn’t making those pervs do pervy things. He’s just making it easier.

Sure, and that’s bad too.

If being a producer of child pornography earns a “sure, that’s bad too” I’m going to just assume you are cool with the whole thing.

The person producing the child porn is infinitely worse.

You got lost buddy. “Sure that’s bad too” was referring to the makers of Grok.

In point of fact, my first response was exactly about the predators using Grok.

Hope this helps.

Nope, I got it, I just am now realizing there is something very wrong with you.

You are letting the cp peddler off lighter than its users. That means that you are morally deficient. That is the point I was trying to make earlier.

Hope this helps.

Go to my original post and point to where I said anything about letting anyone off. Actually said it. Not didn’t respond the way that you apparently need people to respond to make you comfortable.

You didn’t recently come here via Reddit by chance, did you? Because this is absolutely the most Reddit coded response I’ve seen lately.

When a simple statement gets attacked for not including EVERY possibility for an “um akshually” guy. ::orbital eye roll::

Were you truly not able to deduce that if I made the statement about PREDATORY MEN who used Grok that this included the PREDATORY MEN who enabled that use by Grok as well?

Because “yes that’s bad too” is only the opposite of that in YOUR head, buddy.

I’m not dumbing down how I write and I expect a modicum of intelligence and discernment to elicit anything more than derision on my part. Especially accusations like yours so massage your holier-than-thou attitude.

So just let me know how much help you need understanding simple statements of fact next time before you get yourself all hot and bothered.

That goes for the morons who apparently agreed with this guy.

Jesus.

Ok, cool story.

Guns don’t kill people, etc etc…

Right, because they pull their own triggers, etc., etc…

Edit: To clarify to make absolutely certain you can’t possibly think I’m agreeing with you, I’m not complaining about Grok, although chatbot “AI” are stupid fucking tools for tools, I’m complaining about the PREDATORS who use chatbots to do sexual deviancy shit. Not unlike the ammosexuals/ICE agents who fantasize about using their ARs to kill women.

I guess I misinterpreted your comment. I thought you were trying to imply that all of the responsibility rested on the badly-behaving users and that the makers of Grok had no responsibility to prevent sexual harassment and CSAM on their platform.

That is exactly what they are saying.

Another weirdo who thinks “and that’s bad too” is a statement of absolution for predators and those who abet them.

Is that because of No Child Left Behind?

Has Lemmy adopted the “um akshually” guy Reddit standard for writing analysis and media literacy?

No, person of dubious ability to comprehend what you read, this is NOT what I am saying, this is what you want to believe that I am saying. For some bizarre ass reason.

White knighting? Red-pill accusations misdirect?

Please, feel free to explain your logic and thoughts on this.

I am genuinely curious.

Nope. Just think that we should all recognize the root evil here is the Nazi billionaire that is selling a child porn subscription service.

You know. Because Nazi billionaires selling child porn subscription services is a real actual terrible thing.

And what makes you think “and that’s bad too” doesn’t? Seriously. Explain your work.

Was there not enough histrionics or wringing of hands and gnashing of teeth for you?

So explain your logic and thoughts about why you think EVERY FUCKING RESPONSE needs to cater to your whims?

The entire fucking planet knows that Nazi trillionaires selling a service that can be used for child porn, at least be accurate, is BAD. Are you serious right now?

Do you hang around so many Nazi pedos that this needs to be constantly reiterated lest you forget?

Holy moly.

The makers of Grok are responsible for all of its sins, yes.

But mostly I was referring to the men who use software like this for those purposes.

But this is nothing new. I’m old enough that I predate even using Photoshop for things like this.

No, the problem is always perverted men.

And for the incels, no, women don’t do shit like that.

I don’t disagree. But my point is that, if creepy, predatory men are so ubiquitous, so shameless, and such a known factor (which anyone with half a brain should agree they are) then isn’t the fastest and most efficient way to minimize harm removing the tools of abuse from the hands of the abusers?

Sigh.

So this is because I didn’t paint with a broad brush and create a plan of action or are we tarring and feathering ANYONE associated with AI now (which I’m totally on board with, btw, just not something I bother writing in every statement I made)?

The most efficient and effective way to minimize harm, in this case, would be chemical castration of all such abusers everywhere. Anything less is just cosplaying justice.

Should I castigate you for NOT making that statement? Does that make you a horrible person who supports sex offenders? Don’t you feel guilty for NOT including that in EVERY reply or post you make?

See how weird that is?

I find it so odd that because I made a statement about the predatory men who fucking use AI to do this you weirdos think this means that doesn’t include Musk and his team who aid and abet them.

Like it’s WEIRD that some of you jumped to that conclusion. It’s not just you. I’ve been posting on the internet since the mid 90s and late stage Reddit was the first time I encountered that kind of thing and I’m not sure where it came from. Maybe it was always there and I just never encountered it until then.

But DAMN it is WEIRD.

I mean, there’s writing analysis and media literacy, and then there’s whatever this wild speculation shit is.

Do better man.

The most efficient and effective way to minimize harm, in this case, would be chemical castration of all such abusers everywhere. Anything less is just cosplaying justice.

Those drugs are actually of dubious utility unless you are just seeking to cause medical harm to people.

And it has absolutely no bearing on AI. No amount of chemicals will stop grok, and from what I hear Elon’s genitals may already be mangled and useless.

You stop this sort of thing at the source.

I am not a Musk fan but jeezus pleezus… If I bought a Henckels chef knife and killed someone, would the headline be “Henckels Killed Someone”? We need to rid ourselves of big media and bring independent journalism back. The ones who value facts and try to get as close to the truth as possible, not the “hate clicks for money” cabals.

If the knife was sold to you with the knowledge that you were going to commit murder, the person who sold it to you could be up for a felony murder charge, or as an accessory.

There’s also product liability law. Meaning that if a company sells or proliferates a product without proper safety protocols in place and it causes harm that can be foreseen, they are liable.

“We take action against illegal content on X, including Child Sexual Abuse Material (CSAM), by removing it, permanently suspending accounts, and working with local governments and law enforcement as necessary,” X’s “Safety” account claimed that same day.

It really sucks they can make users ultimately responsible.

I think it’s wrong that they carry no liability. At the end of the day they know the product can be used this way, and they haven’t implemented any safety protocols to prevent this. While the users prompting Grok are at fault for their own actions, the platform and its LLM are being used to facilitate it, where other LLMs have guard rails to prevent it. In my mind that alone should make them partially liable.

And yet they leave unfettered access to the tool that makes it possible for predators to do such vile shit.

But will it show Elon’s baby weenie?

In terms of how this is reported, at what point does this become streisanding by proxy? I think anything from the Melon deserves to be scrutinized and called out for missteps and mistakes. At this point, I personally don’t mind if the media is overly critical about any of that because of his behavior. And what I’m reading about Grok is terrible and makes me glad I left Twitter after he bought it. At the same time, these “put X in a bikini” headlines must be drawing creeps towards Grok in droves. It’s ideal marketing to get them interested. Maybe there isn’t a way to shine the necessary light on this that doesn’t also attract the moths. I just think in about ten years’ time we will get a lot of “I started undressing people against their will on Grok and then got hooked” defenses in court rooms. And I wonder if there would’ve been a way to report on it without causing more harm at the same time.
