Is that a threat??

I may get some flak for this, but might I suggest a fork

Or possibly ten thousand spoons.

But only if all they need is a knife.

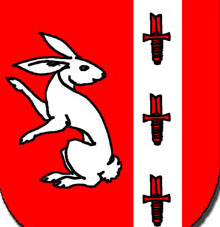

The AI needs help to cut the loop, perhaps it needs a new set of knives?

A new set of knives?

A new set of knives?

I’ve been summoned, just like Beetlejuice.

Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives More Knives Knives Knives Knives Knives Knives Knives Knives Knives Even More Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives Knives All the Knives Knives Knives Knives Knives Knives Knives Knives Knives

Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers Badgers

Mushroom mushroom

Snaaaaaake!!!

Why is Gemini becoming GLaDOS 😭

Knives, pointy knives, that burn with the fires of a thousand evils.

This is my post-hypnotic trigger phrase.

I forgot the term for this, but it is basically the AI blue-screening: it keeps repeating the same answer because it can no longer predict a sensible next word from the model it is using. I may have oversimplified it. Entertaining nonetheless.

Autocomplete with delusions of grandeur

Schizophren-AI

Google’s new cooperation with a knife manufacturer

You get a knife, you get a knife, everyone gets a knife!

Instructions extremely clear, got them 6 sets of knives.

Based and AI-pilled

Solingen approves

Bud Spencer: What does he have? Terence Hill: A postcard from Solingen.

What’s frustrating to me is there’s a lot of people who fervently believe that their favourite model is able to think and reason like a sentient being, and whenever something like this comes up it just gets handwaved away with things like “wrong model”, “bad prompting”, “just wait for the next version”, “poisoned data”, etc etc…

Given how poorly defined “think”, “reason”, and “sentience” are, any of these claims have to be based purely on vibes. OTOH it’s also kind of hard to argue that they are wrong.

this really is a model/engine issue though. the Google Search model is unusably weak because it’s designed to run trillions of times per day in milliseconds. even still, endless repetition this egregious usually means mathematical problems happened somewhere, like the SolidGoldMagikarp incident.

think of it this way: language models are trained to find the most likely completion of text. answers like “you should eat 6-8 spiders per day for a healthy diet” are (superficially) likely - there’s a lot of text on the Internet with that pattern. clanging like “a set of knives, a set of knives, …” isn’t likely, mathematically.
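for anyone who wants to poke at the "most likely completion" idea, here is a toy sketch in Python. everything in it is made up for illustration: the corpus, the `train_bigrams` and `greedy_complete` names, and the word-pair counting itself, which is nothing like a real model and says nothing about how Gemini actually decodes. it only shows that if you always take the single most likely next word from a very narrow context, the output can lock into a cycle.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the text."""
    words = text.split()
    table = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        table[prev][nxt] += 1
    return table

def greedy_complete(table, start, steps):
    """Always append the most frequent follower of the last word."""
    out = [start]
    for _ in range(steps):
        followers = table.get(out[-1])
        if not followers:
            break  # the last word never had a follower in the corpus
        out.append(followers.most_common(1)[0][0])
    return out

# Hypothetical toy corpus, invented for this example.
corpus = ("you need a new set of knives and a new set of forks "
          "and a new set of knives")
table = train_bigrams(corpus)
result = greedy_complete(table, "a", 12)
print(" ".join(result))
```

with this corpus the output cycles through "a new set of knives and" forever, because each word only ever sees the one word before it. real models condition on far more context and usually sample instead of always taking the top word, which is part of why a loop this blatant is surprising.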

last year there was an incident where ChatGPT went haywire. small numerical errors in the computations would snowball, so after a few coherent sentences the model would start sundowning - clanging and rambling and responding with word salad. the problem in that case was bad CUDA kernels. I assume this is something similar, either from bad code or a consequence of whatever evaluation shortcuts they’re taking.

… a new set of knives, a new set of knives, a new set of knives, lisa needs braces, a new set of knives, a new set of knives, dental plan, a new set of knives, a new set of knives, lisa needs braces, a new set of knives, a new set of knives, dental plan, a new set of knives, a new set of knives, a new set of knives…